Ollama, Deepseek, and Graphs

Since the release of Deepseek, many people have been asking how good the model really is.

Despite all the benchmarks, all the memes, and the concerns about potential legal action, one question remains unanswered: how well does Deepseek handle the structured output needed to build a knowledge graph? This question is particularly relevant to us at Cognee, where we use LLMs to transform your data into agent-ready data layers.

Creating an LLM-Powered Knowledge Graph

One of the areas we explored was how capable these models are at creating LLM-powered knowledge graphs. Creating a simple knowledge graph is relatively straightforward: we define a Pydantic model, then use an LLM (via a function call) to generate a JSON output containing the nodes and edges needed to populate that Pydantic model.

Here’s an example of a model we can populate:
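A minimal sketch of such a model, assuming illustrative class and field names (`Node`, `Edge`, `KnowledgeGraph`, `relationship_name`, and so on) rather than Cognee's actual definitions. The JSON string is hand-written to stand in for what an LLM would be asked to return:

```python
import json
from typing import List

from pydantic import BaseModel, Field


class Node(BaseModel):
    """One entity in the graph (field names are assumptions)."""
    id: str
    name: str
    type: str


class Edge(BaseModel):
    """A directed relationship between two nodes, referenced by id."""
    source_node_id: str
    target_node_id: str
    relationship_name: str


class KnowledgeGraph(BaseModel):
    """The structure the LLM output has to populate."""
    nodes: List[Node] = Field(default_factory=list)
    edges: List[Edge] = Field(default_factory=list)


# Hand-written stand-in for the JSON an LLM would return.
raw_output = """
{
  "nodes": [
    {"id": "ollama", "name": "Ollama", "type": "Tool"},
    {"id": "deepseek", "name": "Deepseek", "type": "Model"}
  ],
  "edges": [
    {"source_node_id": "ollama", "target_node_id": "deepseek",
     "relationship_name": "runs"}
  ]
}
"""

# Validation raises pydantic.ValidationError if a field is missing or mistyped.
graph = KnowledgeGraph(**json.loads(raw_output))
print(len(graph.nodes), len(graph.edges))
```

If the model returns malformed JSON or omits a required field, the last step fails, which is the failure mode discussed for the smaller models later in this post.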

Then we send some data, for example:

Below is the corresponding query we would send to the LLM to add the data to the graph:

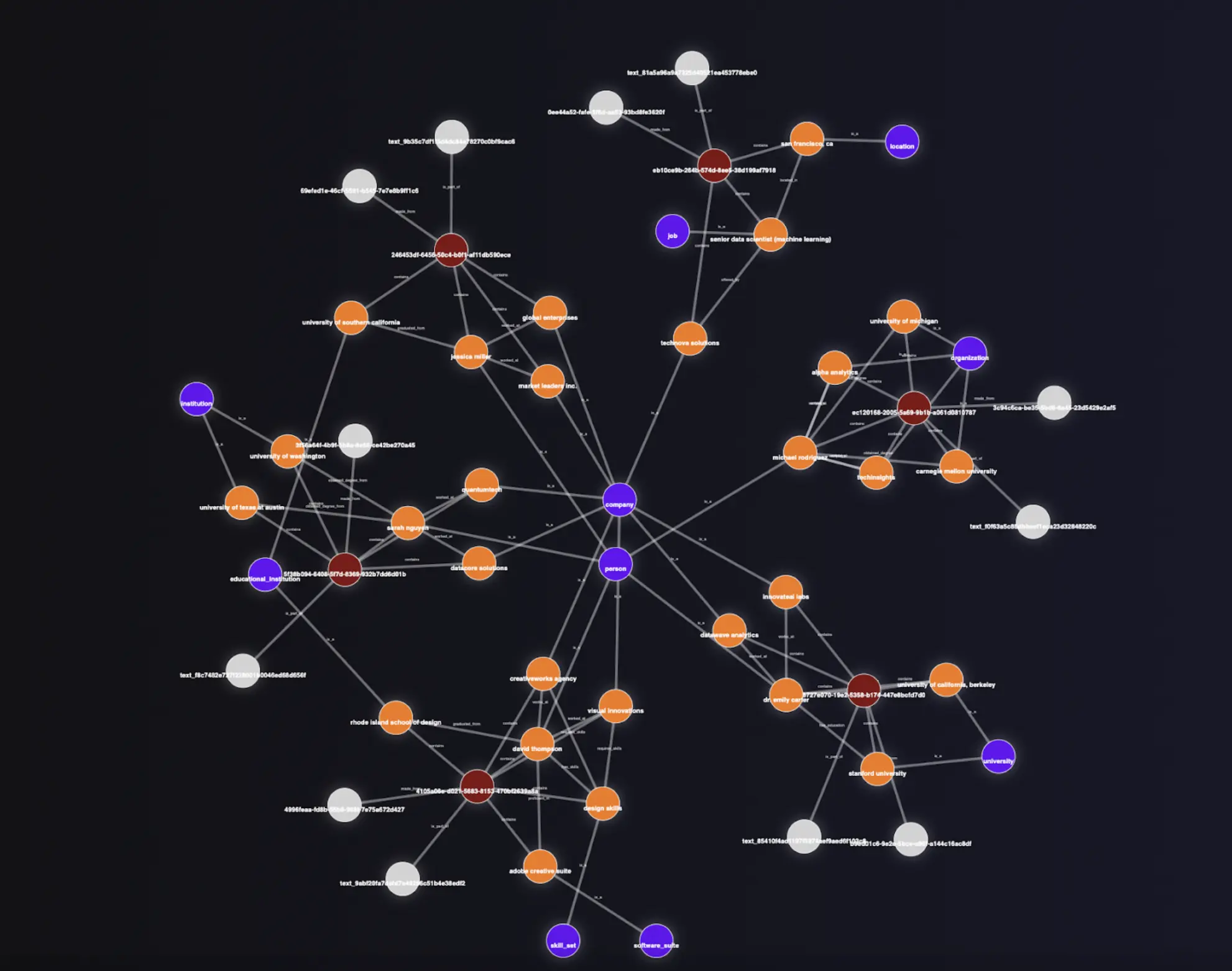

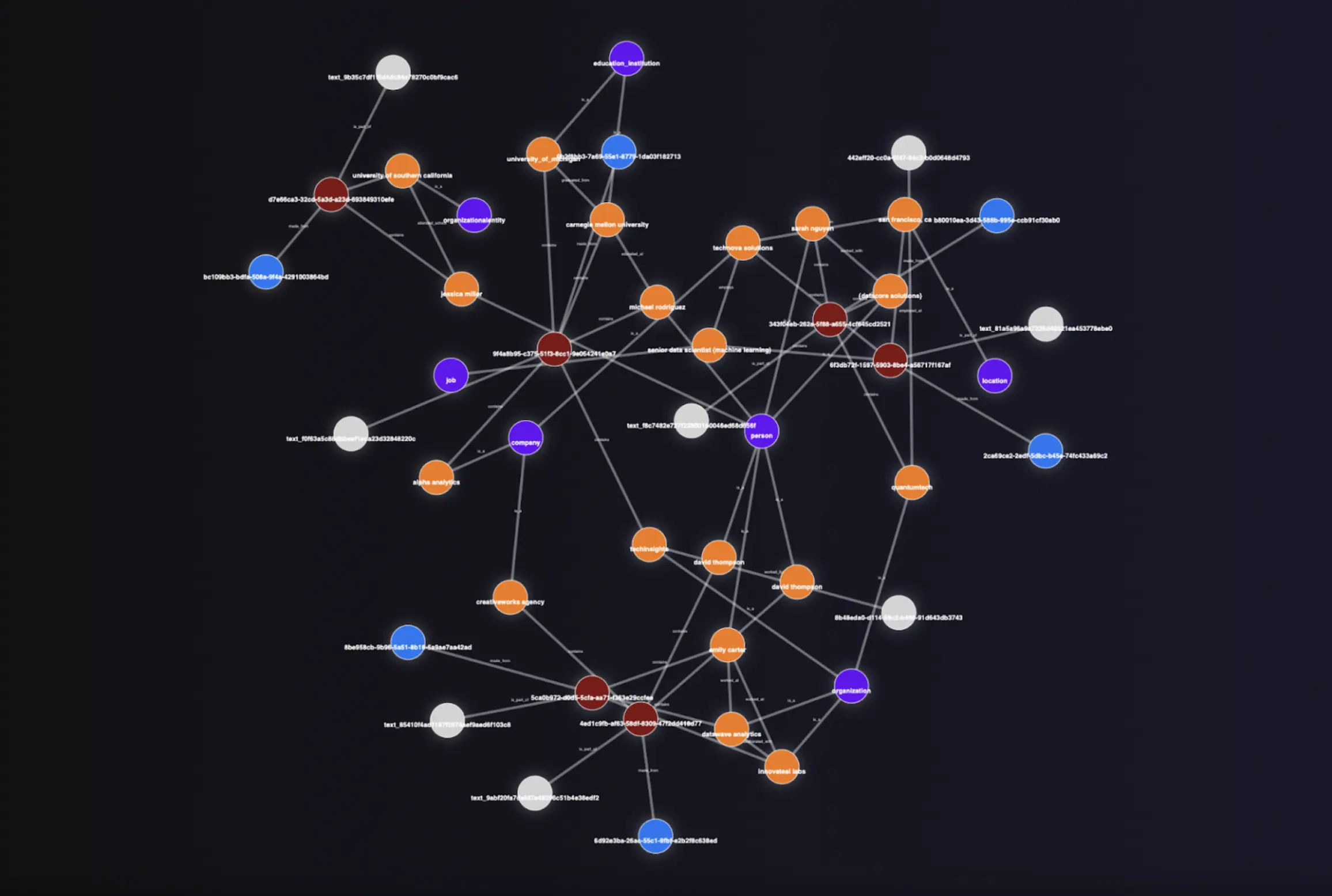

When using OpenAI to generate a graph, we get a result similar to this:

Comparing Deepseek and Other Models

We used Ollama, a free, open-source tool that lets you run large language models (LLMs) locally on your computer, to run Deepseek. However, when using Ollama with Deepseek models, we found they often struggle to generate even basic structured output. They sometimes fail to run locally and occasionally return answers in Chinese. We tried running the following models:

- Deepseek-r1:1.5b

- Deepseek-r1:7b

- Deepseek-r1:8b
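For context, a structured-output request to a local Ollama server can be sketched roughly as follows. This is not the code Cognee uses: the schema, the system prompt, and the helper names `build_chat_payload` and `send` are assumptions for illustration. It also assumes Ollama is listening on its default port and is recent enough to accept a JSON schema in the `format` field (older versions accept only `"json"`).

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # Ollama's default local address

# Illustrative JSON schema for a nodes-and-edges graph (an assumption, not Cognee's schema).
GRAPH_SCHEMA = {
    "type": "object",
    "properties": {
        "nodes": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "id": {"type": "string"},
                    "name": {"type": "string"},
                    "type": {"type": "string"},
                },
                "required": ["id", "name", "type"],
            },
        },
        "edges": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "source_node_id": {"type": "string"},
                    "target_node_id": {"type": "string"},
                    "relationship_name": {"type": "string"},
                },
                "required": ["source_node_id", "target_node_id", "relationship_name"],
            },
        },
    },
    "required": ["nodes", "edges"],
}


def build_chat_payload(text, model="deepseek-r1:8b"):
    """Build the request body asking the model for a graph as JSON."""
    return {
        "model": model,
        "stream": False,         # ask for one complete response instead of chunks
        "format": GRAPH_SCHEMA,  # constrain the output to the schema above
        "messages": [
            {"role": "system",
             "content": "Extract a knowledge graph (nodes and edges) from the user's text."},
            {"role": "user", "content": text},
        ],
    }


def send(payload):
    """POST the payload to Ollama and return the raw text of the model's reply."""
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["message"]["content"]


payload = build_chat_payload("Ollama runs Deepseek models locally.")
# reply = send(payload)  # requires a running Ollama server with the model pulled
```

The returned text still has to be parsed and validated on the client side, since a schema hint does not guarantee that a small model fills the graph with meaningful nodes and edges.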

To compare Deepseek with another small model, we used the latest Mistral 7B. This model performed better on a simple structured example and could return structured output. However, with Cognee, it still failed to generate our knowledge graph structure (nodes and edges). Because it didn’t extract any data, the collections stayed empty and, unsurprisingly, the search step ended with an error. It also sometimes failed to produce a basic string response in a structured format.

Meanwhile, the Llama 3.1 8B model is more usable and can occasionally generate the graph as well as provide answers from retrieved context.

Running the Deepseek-r1:32b model produced some surprising results. The larger model can actually create simpler graphs and, after several retries, it gives reasonable outputs. You can see the graph visualization below, which doesn’t differ much from what OpenAI generates:
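The retry behaviour mentioned above can be sketched as a loop that keeps asking until the reply parses as a usable graph. This is an illustrative pattern, not Cognee's implementation: `generate_graph_with_retries`, `is_valid_graph`, and the `call_llm` callable are hypothetical names, and the validity check is deliberately simple.

```python
import json
from typing import Callable, Optional


def is_valid_graph(payload: dict) -> bool:
    """Accept only payloads with list-valued 'nodes' and 'edges' and at least one node."""
    nodes = payload.get("nodes")
    edges = payload.get("edges")
    return isinstance(nodes, list) and isinstance(edges, list) and len(nodes) > 0


def generate_graph_with_retries(
    call_llm: Callable[[str], str], text: str, max_attempts: int = 3
) -> Optional[dict]:
    """Call the model up to max_attempts times; return the first valid graph or None."""
    for _ in range(max_attempts):
        raw = call_llm(text)
        try:
            payload = json.loads(raw)
        except json.JSONDecodeError:
            continue  # reply was not JSON at all, try again
        if isinstance(payload, dict) and is_valid_graph(payload):
            return payload
    return None


# Stand-in for a flaky model: two unusable replies, then a valid graph.
replies = iter([
    "Sure! Here is the graph you asked for.",
    '{"nodes": []}',
    '{"nodes": [{"id": "a"}], "edges": []}',
])


def fake_llm(_text: str) -> str:
    return next(replies)


result = generate_graph_with_retries(fake_llm, "some text")
print(result)
```

Returning `None` after the last attempt lets the caller decide whether to skip the document or fail loudly, rather than continuing with empty collections.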

Where Deepseek Stands: Key Takeaways for LLM-powered Knowledge Graphs

Our conclusion is that smaller models still aren’t quite there. While larger Deepseek models can produce impressive results, they aren’t yet reliable enough for our team’s needs. However, as model sizes increase and as we move away from distilled models, the combination of Ollama and Deepseek shows promise, and other models also get better at generating graphs.

In a follow-up post, we will run some evaluations and show the difference in answer quality between models.

If you’d like to try it yourself, please refer to our documentation.

You can find more information about Ollama here.

You can also join the Ollama Discord to learn more.

Finally, cognee has a vibrant Discord community—join us to get your questions answered, learn, and share your insights!
