cognee + LlamaIndex: Building Powerful GraphRAG Pipelines

Connecting external knowledge to large language models (LLMs) and retrieving it efficiently is a significant challenge for developers and data scientists. Integrating structured and unstructured data into AI workflows often requires navigating multiple tools, complex pipelines, and time-consuming processes.

Enter cognee, a powerful framework for knowledge and memory management, and LlamaIndex, a versatile data integration library. Together, they enable us to transform retrieval-augmented generation (RAG) pipelines into GraphRAG pipelines, streamlining the path from raw data to actionable insights.

In this post, we’ll run through a demo that leverages cognee and LlamaIndex to create a knowledge graph from LlamaIndex documents, process it into a meaningful structure, and extract useful insights. By the end, you’ll see how these tools can give you new insights into your data by unifying various data sources into one comprehensive semantic layer you can analyze.

RAG - Recap

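RAG enhances LLMs by integrating external knowledge sources during inference. It splits the data into chunks, turns each chunk into a vector representation (an embedding), and stores those vectors in a vector database. At query time, it retrieves the chunks most similar to the question and passes them to the LLM as context.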
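The retrieval half of that loop can be sketched in plain Python. This is a toy and not how cognee or LlamaIndex embed text. A bag-of-words count vector stands in for a learned embedding, an in-memory list stands in for the vector database, and the two sample sentences are placeholders.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """In-memory (chunk, vector) pairs; a real system would use a vector database."""

    def __init__(self) -> None:
        self.rows: list[tuple[str, Counter]] = []

    def add(self, chunk: str) -> None:
        self.rows.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 1) -> list[str]:
        # Rank every stored chunk by similarity to the query and keep the top k.
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


store = ToyVectorStore()
store.add("Jessica Miller is a sales manager.")
store.add("David Thompson is a graphic designer.")
# A real pipeline would now place `context` into the LLM prompt.
context = store.search("Who is the graphic designer?", k=1)
print(context)  # ['David Thompson is a graphic designer.']
```

Only the top-k chunks reach the LLM, which becomes important when the demo later compares RAG with GraphRAG.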

Key Benefits of RAG:

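- Connects domain-specific data to LLMs

- Reduces costs compared with fine-tuning or retraining a model on the same data

- Can deliver more accurate answers than a base LLM on questions about that data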

However, building a RAG system also comes with challenges: handling diverse data formats, managing data updates, creating a robust metadata layer, and improving retrieval accuracy, which is often mediocre.

Introducing Cognee and LlamaIndex

Cognee simplifies knowledge and memory management for LLMs, while LlamaIndex facilitates seamless integration between LLMs and structured data sources, enabling agentic use cases.

Why Cognee?

Cognee draws inspiration from the human mind and higher cognitive functions, emulating the way we construct mental maps of the world and create a semantic understanding of objects, concepts, and relationships in our everyday lives.

Our framework translates this approach into code, allowing developers to build semantic layers that represent knowledge in formalized ontologies—structured depictions of information stored as graphs.

This lets developers create modular connections between their knowledge systems and LLMs, following data engineering best practices while choosing from a range of LLMs, vector stores, and graph stores.
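To make "formalized ontology" concrete, here is a minimal Python sketch. It is not cognee's data model. The ontology declares which node types may be joined by which relations, and the graph rejects any triple that does not fit. The type names (Person, Profession) and the relation works_as are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical ontology: allowed (source type, relation, target type) shapes.
ONTOLOGY = {
    ("Person", "works_as", "Profession"),
}


@dataclass
class KnowledgeGraph:
    node_types: dict[str, str] = field(default_factory=dict)  # node name -> type
    edges: list[tuple[str, str, str]] = field(default_factory=list)  # (source, relation, target)

    def add_node(self, name: str, node_type: str) -> None:
        self.node_types[name] = node_type

    def add_edge(self, source: str, relation: str, target: str) -> None:
        # Reject any triple whose typed shape is not declared in the ontology.
        shape = (self.node_types.get(source), relation, self.node_types.get(target))
        if shape not in ONTOLOGY:
            raise ValueError(f"{shape} is not allowed by the ontology")
        self.edges.append((source, relation, target))


kg = KnowledgeGraph()
kg.add_node("Jessica Miller", "Person")
kg.add_node("Sales Manager", "Profession")
kg.add_edge("Jessica Miller", "works_as", "Sales Manager")
```

Swapping in a different ONTOLOGY set is the toy equivalent of building a domain-specific schema for a new vertical.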

Cognee + LlamaIndex = ?

Together, cognee and LlamaIndex can:

- Transform unstructured and semi-structured data into graph or vector representations

- Enable domain-specific ontology generation, producing graphs tailored to each vertical

- Provide a deterministic layer for LLM outputs, making results more consistent and reliable

Step-by-Step Demo: Building a GraphRAG System with Cognee and LlamaIndex

In this section, we’ll walk through a complete demo that showcases how to use cognee and LlamaIndex to create and interact with a knowledge graph. By following along, you’ll gain hands-on experience in transforming raw textual data into actionable insights using a GraphRAG pipeline. You can find the notebook here.

1. Setting Up the Environment

Install the necessary dependencies in your local environment:

Then import the required libraries and configure the environment in Python:

Before continuing, make sure your API keys are set.
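The notebook's install and import cells are not reproduced here. As a small, hedged sketch of the API-key check, this helper assumes your key is read from an environment variable named OPENAI_API_KEY. That name is an assumption, so use whichever one your LLM provider and the notebook expect. The helper lists any required variables that are unset or empty.

```python
import os
from collections.abc import Mapping

# Assumption: the LLM key lives in an environment variable with this name.
REQUIRED_VARS = ("OPENAI_API_KEY",)


def missing_env_vars(
    env: Mapping[str, str] = os.environ,
    required: tuple[str, ...] = REQUIRED_VARS,
) -> list[str]:
    """Return the names of required variables that are unset or empty."""
    return [name for name in required if not env.get(name)]
```

Call missing_env_vars() at the top of the notebook and stop if the list is non-empty. A missing key then surfaces immediately instead of as a failed LLM call several cells later.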

2. Preparing the Dataset

We’ll use short profiles of two individuals, each in its own document, as our sample dataset:
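The notebook wraps each profile in a LlamaIndex Document (Document(text=...) from llama_index.core). So that this sketch runs without LlamaIndex installed, it uses a small stand-in dataclass with the same idea: raw text plus metadata. The profile sentences and the source metadata keys are placeholders, not the notebook's exact wording.

```python
from dataclasses import dataclass, field


@dataclass
class Doc:
    """Stand-in for a LlamaIndex Document: raw text plus optional metadata."""

    text: str
    metadata: dict = field(default_factory=dict)


# Placeholder profiles: one person per document.
documents = [
    Doc(
        "Jessica Miller is a sales manager with experience building sales teams.",
        {"source": "profile_1"},
    ),
    Doc(
        "David Thompson is a graphic designer with experience in visual branding.",
        {"source": "profile_2"},
    ),
]
```

Keeping one person per document matters later in the demo: a retriever that returns a single chunk only ever sees one of the two people.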

3. Initializing CogneeGraphRAG

Instantiate CogneeGraphRAG with configurations for the LLM, graph, and database providers:
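The LLM and each store are configured independently, which is what lets you swap providers. As a hedged illustration only, the sketch below assumes separate graph, vector, and relational stores plus an LLM. It groups those settings in a frozen dataclass and checks them against an allow-list you supply. The field names are illustrative, so check the notebook for CogneeGraphRAG's actual parameters. The provider values are examples, not a complete or confirmed list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GraphRAGConfig:
    """Hypothetical settings bundle; field names are illustrative, not a confirmed signature."""

    llm_provider: str
    llm_model: str
    graph_db_provider: str
    vector_db_provider: str
    relational_db_provider: str

    def validate(self, supported: dict[str, set[str]]) -> None:
        """Raise ValueError if any listed field holds a value outside its allow-list."""
        for field_name, allowed in supported.items():
            value = getattr(self, field_name)
            if value not in allowed:
                raise ValueError(f"{field_name}={value!r} is not one of {sorted(allowed)}")


# Example values only; confirm the supported options in the cognee documentation.
config = GraphRAGConfig(
    llm_provider="openai",
    llm_model="gpt-4o-mini",
    graph_db_provider="networkx",
    vector_db_provider="lancedb",
    relational_db_provider="sqlite",
)
config.validate({"graph_db_provider": {"networkx", "neo4j"}, "vector_db_provider": {"lancedb"}})
```

Freezing the dataclass keeps one configuration fixed for the whole run, so the graph built in one cell matches the stores queried in later cells.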

4. Adding Data to Cognee

Load the dataset into the cognee framework:

This step prepares the data for graph-based processing.
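Adding data and processing it are separate steps. One way to picture the first step is a staging area that stores raw text under a dataset name and does nothing else. The sketch below is hypothetical and not cognee's implementation. Skipping exact duplicates is a design choice of this sketch, so that re-running a notebook cell does not stage the same text twice. It is not a claim about cognee's behavior.

```python
from collections import defaultdict


class StagingArea:
    """Hypothetical stand-in for the 'add' step: stores raw text per dataset, processes nothing."""

    def __init__(self) -> None:
        self.datasets: dict[str, list[str]] = defaultdict(list)

    def add(self, texts: list[str], dataset_name: str) -> int:
        """Stage texts under dataset_name, skipping exact duplicates; return how many were new."""
        staged = self.datasets[dataset_name]
        added = 0
        for text in texts:
            if text not in staged:
                staged.append(text)
                added += 1
        return added


area = StagingArea()
area.add(
    ["Jessica Miller is a sales manager.", "David Thompson is a graphic designer."],
    "profiles",  # hypothetical dataset name
)
```

Naming the dataset lets the later processing step pick up exactly this batch of documents.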

5. Processing Data into a Knowledge Graph

Transform the data into a structured knowledge graph:

The graph now contains nodes and relationships derived from the dataset, giving you a structure you can query and explore.
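cognee uses an LLM to extract entities and relationships. The sketch below swaps in a naive regular expression so it runs offline. It shows the shape of the result: text becomes (subject, relation, object) triples, and the triples become an adjacency map. The works_as relation name and the "Name is a role" pattern are hypothetical.

```python
import re
from collections import defaultdict

# Naive pattern standing in for LLM-based extraction: "<First Last> is a <two-word role>".
PATTERN = re.compile(r"^(?P<person>[A-Z][a-z]+ [A-Z][a-z]+) is an? (?P<role>[a-z]+ [a-z]+)")


def extract_triples(text: str) -> list[tuple[str, str, str]]:
    """Return one (person, 'works_as', role) triple if the sentence matches, else nothing."""
    match = PATTERN.match(text)
    if not match:
        return []
    return [(match["person"], "works_as", match["role"])]


def build_graph(texts: list[str]) -> dict[str, set[tuple[str, str]]]:
    """Adjacency map: node -> {(relation, neighbor)}, with an inverse edge for each triple."""
    graph: dict[str, set[tuple[str, str]]] = defaultdict(set)
    for text in texts:
        for subj, rel, obj in extract_triples(text):
            graph[subj].add((rel, obj))
            graph[obj].add((f"inverse_{rel}", subj))
    return dict(graph)


graph = build_graph([
    "Jessica Miller is a sales manager.",
    "David Thompson is a graphic designer.",
])
```

Storing the inverse edge lets you start a traversal from either end, for example from a role back to the people who hold it.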

6. Performing Searches

Unlike traditional RAG, which answers from a handful of retrieved chunks, GraphRAG offers a global view of the dataset. This can produce more comprehensive and accurate results for questions that span documents. Below are examples of performing searches with both the knowledge graph and RAG approaches.

- Answering the prompt with the knowledge graph approach:

Running the graph search above gives the following result:

- Answering the prompt with the RAG approach:

Running the RAG search above gives the following result:

In this example, the knowledge graph-based approach (GraphRAG) clearly outperforms the RAG approach.

Because it can aggregate and infer information from a global context, GraphRAG identified all the individuals mentioned across multiple documents. In contrast, the RAG approach identified only the individuals within a single document, because it answers from retrieved chunks rather than from the whole corpus.

This highlights GraphRAG’s strength in resolving queries that span a broader corpus of interconnected data.
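The mechanism behind that difference can be reproduced in a toy. This is not the notebook's code or its results. The graph side answers "who are the people mentioned?" by reading every Person node, whichever document produced it. The RAG side retrieves only the top-k chunks by word overlap. Here both chunks score zero and the tie goes to the first, so with k=1 only one person reaches the answer. The capitalized-name rule is a hypothetical stand-in for real entity extraction.

```python
import re

documents = [
    "Jessica Miller is a sales manager.",
    "David Thompson is a graphic designer.",
]

# Graph side: Person nodes extracted from every document (naive "First Last" rule).
NAME = re.compile(r"\b[A-Z][a-z]+ [A-Z][a-z]+\b")
person_nodes = {name for doc in documents for name in NAME.findall(doc)}


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def graph_answer() -> list[str]:
    # A global query reads every Person node, no matter which document produced it.
    return sorted(person_nodes)


def rag_answer(question: str, k: int = 1) -> list[str]:
    # Chunk retrieval: rank documents by word overlap with the question, keep only the top k.
    q = words(question)
    ranked = sorted(documents, key=lambda d: len(q & words(d)), reverse=True)
    return sorted(name for doc in ranked[:k] for name in NAME.findall(doc))


question = "Who are the people mentioned?"
print(graph_answer())        # ['David Thompson', 'Jessica Miller']
print(rag_answer(question))  # ['Jessica Miller']
```

Raising k to 2 recovers both names in this tiny corpus. In a large corpus, though, no small k guarantees that every relevant chunk is retrieved, which is the gap a graph-level query closes.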

7. Finding Related Nodes

Explore relationships in the knowledge graph:
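Finding related nodes is a graph traversal. The breadth-first search below returns every node within a given number of hops from a starting node. The small undirected graph, including the Person type node that links the two profiles, is hypothetical and not cognee's internal structure.

```python
from collections import deque

# Hypothetical undirected graph: a Person type node links the two profiles.
GRAPH = {
    "Jessica Miller": {"sales manager", "Person"},
    "David Thompson": {"graphic designer", "Person"},
    "sales manager": {"Jessica Miller"},
    "graphic designer": {"David Thompson"},
    "Person": {"Jessica Miller", "David Thompson"},
}


def related_nodes(graph: dict[str, set[str]], start: str, max_hops: int = 1) -> set[str]:
    """Breadth-first search: nodes reachable from start within max_hops edges (start excluded)."""
    seen = {start}
    frontier = deque([(start, 0)])
    while frontier:
        node, depth = frontier.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in graph.get(node, set()):
            if neighbor not in seen:
                seen.add(neighbor)
                frontier.append((neighbor, depth + 1))
    return seen - {start}
```

With one hop, starting from Person returns the two people. With two hops, it also reaches their professions. The hop limit keeps results focused on a large graph.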

Why Choose Cognee and LlamaIndex?

1. Synergized Agentic Framework and Memory

Your agents are empowered with long-term and short-term memory, along with domain-specific memory tailored to their unique contexts.

2. Enhanced Querying and Insights

Your memory can automatically self-optimize over time, producing more accurate and insightful responses to complex queries.

3. Simplified Deployment

The framework deploys with ready-to-use standard tools, so there is no need for extensive setup and you can focus on delivering results quickly.

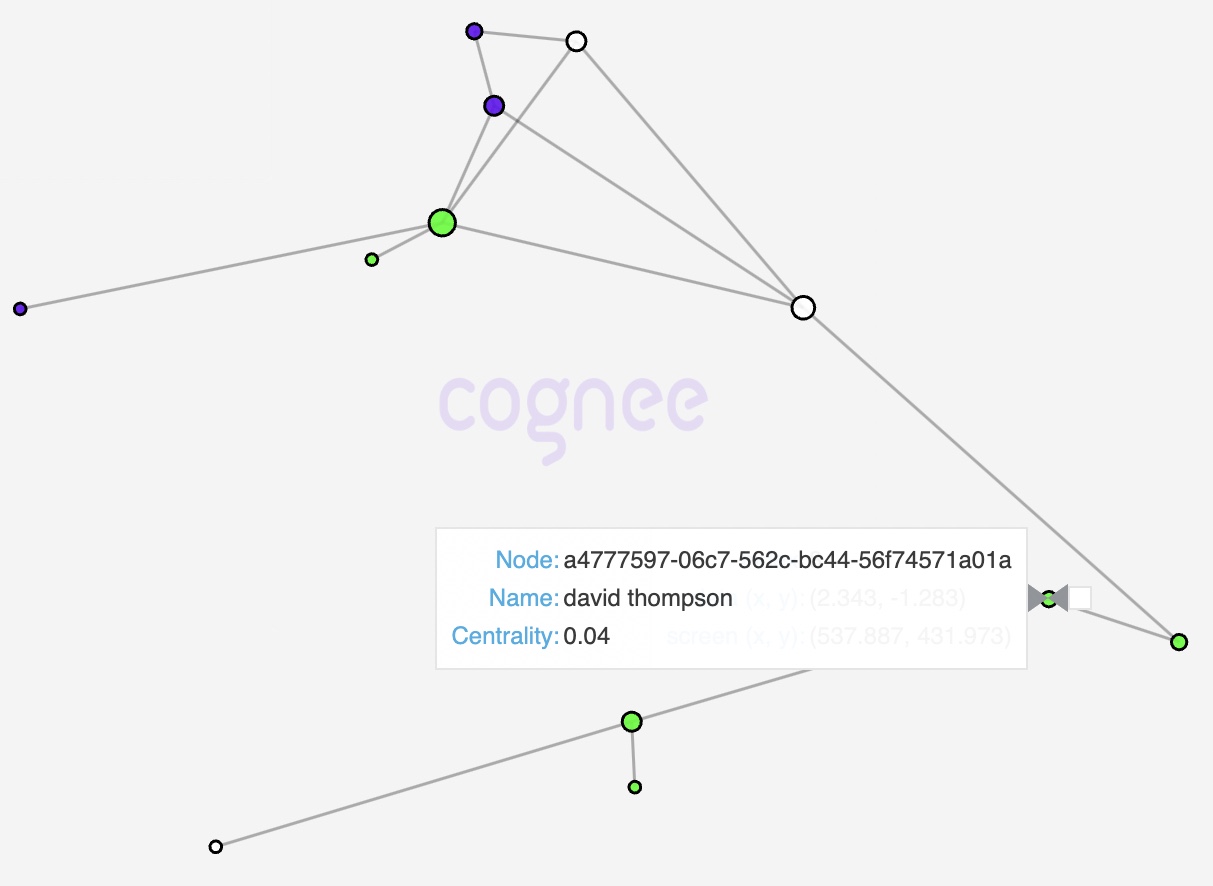

Visualizing the Knowledge Graph

Imagine a graph structure where each node represents a document or entity, and edges indicate relationships. These edges may capture nuanced connections, such as hierarchical relationships, co-references, or temporal sequences. The result is a visual representation that brings your data to life, helping you uncover patterns and insights that are otherwise hidden in text.

For instance, in the example above, you might visualize Jessica Miller and David Thompson as nodes, with edges linking them to attributes like "profession" or "experience." This visual map not only simplifies data exploration but also aids in validating relationships for further refinement.
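The notebook has its own visualization, which is not reproduced here. This sketch shows one independent way to turn triples into a Graphviz DOT file. Nodes become labeled boxes, and each relation becomes an edge label. The triples are placeholders based on the "profession" example above.

```python
def to_dot(triples: list[tuple[str, str, str]]) -> str:
    """Render (source, relation, target) triples as a Graphviz DOT digraph."""

    def quote(s: str) -> str:
        # DOT string literals need backslashes and double quotes escaped.
        return '"' + s.replace("\\", "\\\\").replace('"', '\\"') + '"'

    lines = ["digraph knowledge_graph {"]
    for source, relation, target in triples:
        lines.append(f"  {quote(source)} -> {quote(target)} [label={quote(relation)}];")
    lines.append("}")
    return "\n".join(lines)


triples = [
    ("Jessica Miller", "profession", "sales manager"),
    ("David Thompson", "profession", "graphic designer"),
]
print(to_dot(triples))
```

Saving the output as graph.dot and running dot -Tpng graph.dot -o graph.png with Graphviz installed renders the image. That makes it easy to eyeball whether extracted relationships look right before refining them.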

Here’s the visualized knowledge graph from the example above:

Unleash the Potential of GraphRAG

From unifying diverse data sources to enabling more accurate insights, cognee and LlamaIndex pave the way toward efficient and intelligent knowledge management and data integration.

If you’re interested in harnessing the potential of these combined tools, try running our detailed demo yourself on Google Colab, and see how they could simplify your AI pipelines while delivering meaningful results. While you’re at it, join the cognee community to connect with other developers, share your feedback, and stay updated on the latest advancements in GraphRAG and knowledge management frameworks.

Let’s start transforming data into intelligence.
