LLM Memory: Integration of Cognitive Architectures with AI

In the quickly evolving world of generative AI, Large Language Models (LLMs) often steal the spotlight. Yet, just as a human mind relies on memory to retain facts and contextual knowledge, LLMs can benefit from memory systems that allow them to store, recall, and manage information.

Below, we’ll explore the concept of AI memory, explain its role in improving LLM performance, and highlight how platforms like cognee aim to build robust data pipelines that bring us closer to production-ready AI data infrastructure.

What is LLM Memory?

When we talk about “LLM memory,” we’re referring to the architecture and methods that enable AI systems to access and retain information over time.

Typically, an LLM only keeps track of data within the context window of the current query - anything beyond that window is lost unless it is reintroduced. The model answers a prompt and then immediately “forgets” what happened during the interaction. Memory-augmented LLMs, on the other hand, leverage additional data stores and cognitive-like processes to remember facts, user preferences, and previous actions.

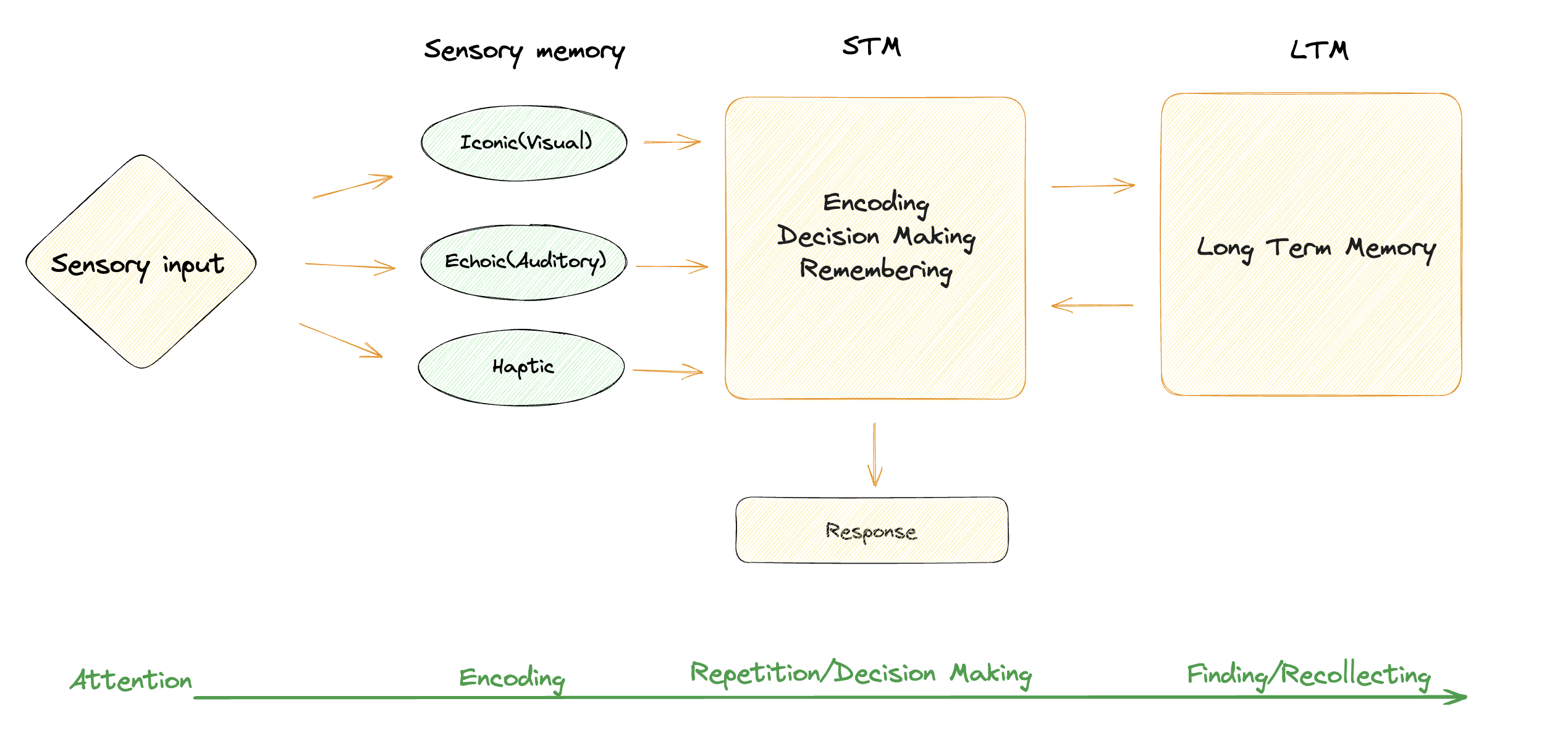

This concept of memory in AI has many parallels to the human mind. In general, the goal is to preserve context so the model can maintain accuracy and personalization over multiple steps or sessions. In our very first blog series, we described how each type of memory, and the data processing that goes with it, maps onto LLM systems:

- Sensory Memory (SM): In humans, sensory memory refers to the information we grasp through our senses (visual, auditory, or otherwise) within the first few seconds of perception, after which it’s either discarded or moved to short-term memory. In LLM systems, SM corresponds to an API request or prompt being fed into the system.

- Short-Term Memory (STM): Human short-term memory (also called working memory) holds and manipulates a small amount of information in an active state. In LLMs, STM can be thought of as handling the input - the text tokens or embeddings currently available for prompt processing - within the immediate context window. Once this session ends, the model usually “loses” that context.

- Long-Term Memory (LTM): In humans, long-term memory retains knowledge, experiences, and skills over time. Long-term memory is divided into explicit (declarative) memory, which is conscious and includes episodic memory (life events) and semantic memory (facts and concepts), and implicit (procedural) memory, which is unconscious and encompasses skills and learned tasks. For LLMs, LTM can be implemented through external databases, vector stores, or graph structures that keep relevant data available and allow the model to “recall” information in future queries.
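To make the three tiers above concrete, here is a minimal sketch in Python. It is a toy model, not how cognee or any particular LLM API implements memory: the class name `TieredMemory` and its methods are hypothetical, and a plain dictionary stands in for a real database, vector store, or graph.

```python
from collections import deque


class TieredMemory:
    """Toy model of the tiers described above (all names are illustrative)."""

    def __init__(self, stm_capacity: int = 3) -> None:
        # A bounded deque drops its oldest entry once full, loosely
        # mirroring a fixed-size context window.
        self.short_term = deque(maxlen=stm_capacity)
        # A dict stands in for an external long-term store.
        self.long_term = {}

    def perceive(self, prompt: str) -> None:
        """Sensory input: the incoming prompt enters short-term memory."""
        self.short_term.append(prompt)

    def consolidate(self, key: str, fact: str) -> None:
        """Promote a fact to long-term storage so it outlives the session."""
        self.long_term[key] = fact

    def end_session(self) -> None:
        """Short-term context is lost when the session ends."""
        self.short_term.clear()


memory = TieredMemory(stm_capacity=2)
memory.perceive("My name is Ada.")
memory.perceive("I prefer metric units.")
memory.perceive("What is 5 miles in km?")  # pushes out the oldest prompt
memory.consolidate("user_name", "Ada")
memory.end_session()
print(list(memory.short_term), memory.long_term)
```

After the session ends, only the consolidated fact survives, which is the behavior the STM/LTM split is meant to capture.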

Types of AI Memory Architectures

1. LLM Short-Term Memory

Short-term memory in AI deals with immediate context windows. Most popular LLM APIs (e.g., GPT-4, Claude, Cohere) offer a limited prompt size to process an incoming user query. This context window is ephemeral - it disappears once the model completes the response. If you need to preserve essential details across sessions, you need an external memory component.
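As a rough illustration of why details fall out of a limited context window, the sketch below keeps only the most recent messages that fit a token budget. The function names are hypothetical, and counting whitespace-separated words is only a stand-in for a real tokenizer, which would split text differently.

```python
def count_tokens(text: str) -> int:
    # Rough stand-in: real tokenizers do not split on whitespace.
    return len(text.split())


def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits the budget."""
    kept: list[str] = []
    used = 0
    # Walk backwards so the newest messages are kept first.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    "My order number is 1234",    # 5 words
    "It arrived damaged",         # 3 words
    "Can I get a refund please",  # 6 words
]
window = fit_to_window(history, max_tokens=10)
print(window)
```

With a budget of 10, the oldest message (the one carrying the order number) no longer fits, so the model would never see it unless an external memory component reintroduced it.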

2. LLM Long-Term Memory

Long-term memory refers to the external systems that store data beyond a single chat session or prompt. Vector databases and graph databases enable LLMs to go beyond transient chat memory. By converting information into vectors, data can be represented in a numerical format that captures relationships and patterns, making it easier for AI models to process, compare, and retrieve relevant information efficiently. Similarly, graph databases organize data into nodes and edges, highlighting the relationships between concepts, which provides a more intuitive and interconnected structure than raw data storage.
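The idea of retrieving by vector similarity can be sketched in a few lines. This is a simplified illustration, not a real vector database: the three-dimensional vectors below are written by hand, whereas a real system would obtain much larger vectors from an embedding model, and the names `store` and `recall` are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-written vectors stand in for real embeddings (an assumption).
store = {
    "User prefers vegetarian recipes": [0.9, 0.1, 0.0],
    "User lives in Berlin": [0.1, 0.9, 0.1],
    "Invoice 42 is overdue": [0.0, 0.1, 0.9],
}


def recall(query_vector: list[float], top_k: int = 1) -> list[str]:
    """Return the top_k stored texts most similar to the query vector."""
    ranked = sorted(
        store,
        key=lambda text: cosine_similarity(store[text], query_vector),
        reverse=True,
    )
    return ranked[:top_k]


# Pretend this is the embedding of "What should I cook tonight?"
result = recall([0.8, 0.2, 0.1])
print(result)
```

The query vector points in nearly the same direction as the stored preference about recipes, so that entry is ranked first.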

By storing and retrieving vector embeddings, an AI application can “remember” details about users or large-scale document repositories. Systems like cognee also introduce hierarchical relationships (displayed visually, in a user-friendly graph) for more sophisticated data representation, emulating the dense interconnectedness of concepts found in human semantic networks.
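To show what a graph adds on top of flat storage, here is a small sketch that stores facts as nodes and edges and then follows relationships outward from one node. It uses plain Python structures rather than a graph database, the example facts are invented, and the helper name `neighbors` is hypothetical.

```python
from collections import defaultdict

# Each edge is (source, relation, target). The facts are made up.
edges = [
    ("Ada", "works_at", "Acme"),
    ("Acme", "located_in", "Berlin"),
    ("Ada", "prefers", "metric units"),
]

# Adjacency list: node -> list of (relation, target).
graph = defaultdict(list)
for source, relation, target in edges:
    graph[source].append((relation, target))


def neighbors(node: str, max_hops: int = 2) -> list[tuple[str, str, str]]:
    """Collect facts reachable within max_hops, breadth-first."""
    facts = []
    frontier = [node]
    seen = {node}
    for _ in range(max_hops):
        next_frontier = []
        for current in frontier:
            for relation, target in graph.get(current, []):
                facts.append((current, relation, target))
                if target not in seen:
                    seen.add(target)
                    next_frontier.append(target)
        frontier = next_frontier
    return facts


print(neighbors("Ada"))
```

Starting from "Ada", a two-hop traversal also surfaces that Acme is located in Berlin, a connection that a lookup of Ada alone would not return.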

Importance of Memory in AI

As AI systems grow more sophisticated, the integration of memory becomes essential. Memory-augmented architectures enable these systems to not only process immediate inputs but also retain and leverage context from previous interactions, driving advancements in personalization, accuracy, and efficiency. Below are some key reasons why memory integration is important for AI systems:

1. Context-Rich Responses

Traditional LLMs are stateless, meaning they process each prompt in isolation without retaining previous context. Integrating memory systems addresses this limitation by providing continuity across interactions: memory-augmented LLMs can tap into both short-term context and long-term data to give you deeper, more personalized responses.

2. Reduced Hallucinations

Hallucinations typically occur when LLMs try to fill gaps in their knowledge without having the relevant information at their disposal. By anchoring responses in stored facts - a technique known as retrieval-augmented generation (RAG) - memory systems help mitigate these errors. Implementing RAG is challenging because of its data handling requirements, but its limitations are being addressed through approaches such as Graph RAG, which leverages graph-based structures to improve retrieval accuracy and scalability. Effective LLM memory implementation is therefore crucial for minimizing hallucinations and improving factual accuracy.
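The retrieve-then-generate pattern can be sketched without calling any model. In the example below, retrieval is a naive word-overlap score rather than embedding search, the stored facts are invented, and `retrieve` and `build_prompt` are hypothetical helper names. The resulting prompt is what would be sent to an LLM.

```python
import re

# Invented facts standing in for a long-term memory store.
documents = [
    "The refund window is 30 days from delivery.",
    "Support is available on weekdays from 9 to 17 CET.",
    "Premium plans include priority support.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by word overlap with the question (naive scoring)."""
    query_words = tokenize(question)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in docs]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(question: str, docs: list[str]) -> str:
    """Anchor the question in retrieved facts before it reaches the model."""
    context = retrieve(question, docs)
    if not context:
        return f"Question: {question}\nIf you are unsure, say you do not know."
    facts = "\n".join(f"- {fact}" for fact in context)
    return (
        "Answer using only the facts below.\n"
        f"{facts}\n"
        f"Question: {question}"
    )


prompt = build_prompt("How long is the refund window?", documents)
print(prompt)
```

Because the answer is present in the prompt, the model has less reason to invent one. When nothing relevant is retrieved, the fallback prompt asks the model to admit uncertainty instead.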

3. Efficient Data Handling

Manually reviewing large volumes of documents (e.g., PDFs, financial statements) is time-consuming and error-prone. A memory-augmented LLM pipeline can automatically ingest, categorize, and store data, then retrieve it on demand - speeding up workflows in various industries. This architecture allows for targeted retrieval and cuts down on redundant API calls or database queries, which in turn lowers computational costs and improves efficiency.

4. Step Towards Self-Evolution

Integrating LTM into AI systems is a crucial step toward enabling LLMs to evolve their inference ability. Much like human learning, this integration allows AI models to adapt to new tasks with limited data or interactions, enhancing their performance and applicability in real-world scenarios.

Challenges in AI Memory Integration

1. Data Engineering Complexity

Many current AI projects function as “thin wrappers” around a powerful LLM API, providing little functionality beyond an interface to the underlying model. For a system to emulate cognitive-like memory by storing and recalling information as needed, it requires robust data engineering practices such as containerization, data contracts, schema management, and vector indexing. A well-designed LLM memory architecture takes significant engineering effort but delivers substantial improvements in overall system performance.

2. Interoperability & Scaling

Selecting a single database or memory store isn’t always enough. Large deployments often need relational databases, vector stores, and graph databases working in tandem. Multi-turn or multi-agent systems create a bigger challenge: the memory must be shared and updated across potentially hundreds (or thousands) of LLM interactions. This intensifies the need for structured data and cohesive memory management.

3. Reliability & Testing

Memory-augmented AI systems introduce more variables: chunk sizes, embedding models, retrieval strategies, and search methods. Structured testing frameworks help developers iterate on these parameters and measure improvements in a systematic way.
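One way to picture such a testing loop is a small parameter sweep. The sketch below is an illustrative toy, not an established evaluation framework: the document, the test cases, the word-overlap retriever, and the `hit_rate` metric are all assumptions chosen to keep the example self-contained.

```python
from itertools import product


def chunk_words(text: str, size: int) -> list[str]:
    """Split text into consecutive chunks of `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def retrieve(question: str, chunks: list[str], top_k: int) -> list[str]:
    """Rank chunks by word overlap with the question (naive scoring)."""
    query_words = set(question.lower().split())
    ranked = sorted(
        chunks,
        key=lambda chunk: len(query_words & set(chunk.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]


def hit_rate(document: str, cases: list[tuple[str, str]],
             chunk_size: int, top_k: int) -> float:
    """Fraction of questions whose expected phrase appears in a retrieved chunk."""
    chunks = chunk_words(document, chunk_size)
    hits = 0
    for question, expected in cases:
        if any(expected in chunk for chunk in retrieve(question, chunks, top_k)):
            hits += 1
    return hits / len(cases)


# Invented document and test cases for illustration only.
document = (
    "Refunds are accepted within 30 days of delivery. "
    "Support is available on weekdays. "
    "Premium plans include priority support and a dedicated account manager."
)
cases = [
    ("how many days for refunds", "30 days"),
    ("who gets priority support", "priority support"),
]

# Sweep chunk size and top_k, recording the hit rate for each combination.
results = {
    (size, k): hit_rate(document, cases, size, k)
    for size, k in product([4, 8], [1, 2])
}
for config, score in sorted(results.items()):
    print(config, score)
```

Comparing the scores across configurations gives a repeatable basis for choosing parameters, instead of judging by a handful of manual queries.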

4. Privacy & Governance

Long-term user data storage raises privacy and compliance concerns. Access controls, encryption, and ethical guidelines must be enforced, ensuring the AI memory system remains a valuable resource rather than a liability.

Recent Advancements in AI Memory

LLM Conversational Memory

Modern LLMs offer partial “conversation history” by retaining context within a session. However, this is typically constrained by the prompt window size, meaning earlier interactions may be truncated or lost entirely. Memory-enhanced systems go further by capturing context from hundreds or thousands of past messages or uploaded documents. This is critical for sophisticated multi-turn interactions.

LLM Memory Management

Memory managers coordinate how data is chunked, retrieved, and combined for a response. By modeling different “memory domains” (e.g., short-term vs. long-term, factual vs. episodic), the system can strategically filter out noise and focus on the right data, much like the selective attention processes in human cognition.
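A minimal sketch of this kind of filtering follows. It assumes each memory item already carries a relevance score from an upstream retriever. The `MemoryItem` type, the domain names, and the per-domain limits are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class MemoryItem:
    domain: str       # e.g. "short_term", "factual", "episodic"
    text: str
    relevance: float  # assumed to be scored upstream by a retriever


def assemble_context(items: list[MemoryItem],
                     per_domain_limit: dict[str, int],
                     min_relevance: float = 0.5) -> list[str]:
    """Pick the most relevant items per domain, dropping low-relevance noise."""
    selected: list[MemoryItem] = []
    for domain, limit in per_domain_limit.items():
        candidates = [
            item for item in items
            if item.domain == domain and item.relevance >= min_relevance
        ]
        candidates.sort(key=lambda item: item.relevance, reverse=True)
        selected.extend(candidates[:limit])
    return [item.text for item in selected]


items = [
    MemoryItem("short_term", "User just asked about invoice 42", 0.9),
    MemoryItem("factual", "Invoice 42 is due on 1 March", 0.8),
    MemoryItem("factual", "Office plants are watered on Fridays", 0.2),
    MemoryItem("episodic", "Last week the user disputed invoice 41", 0.6),
    MemoryItem("episodic", "The user once asked about parking", 0.55),
]
context = assemble_context(
    items, {"short_term": 1, "factual": 2, "episodic": 1}
)
print(context)
```

The irrelevant fact about office plants falls below the threshold, and the episodic domain contributes only its single best match, so the final context stays small and focused.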

Memory Tuning

Developers can configure chunk sizes, overlap, indexing types, and retrieval methods (semantic search, keyword, or hybrid). Tuned correctly, these parameters reduce computational costs and boost accuracy.
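Chunk size and overlap are the easiest of these parameters to show in code. The sketch below splits a list of words into fixed-size chunks that share some words with their neighbors. The function name `chunk_with_overlap` is hypothetical, and real pipelines usually chunk by tokens, sentences, or document structure rather than raw words.

```python
def chunk_with_overlap(words: list[str], chunk_size: int,
                       overlap: int) -> list[list[str]]:
    """Split words into chunks of chunk_size, repeating `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(words[start:start + chunk_size])
        # Stop once a chunk has reached the end of the input.
        if start + chunk_size >= len(words):
            break
    return chunks


words = "a b c d e f g".split()
chunks = chunk_with_overlap(words, chunk_size=4, overlap=2)
print(chunks)
```

Overlap means a sentence cut at a chunk boundary still appears intact in at least one chunk, at the cost of storing and indexing some text twice.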

Applications of Memory-Enhanced LLMs

1. Enterprise Document Processing

Large-scale analysis of PDFs, such as invoices, contracts, or legal documents, can be dramatically accelerated. Memory-augmented LLMs systematically store and retrieve relevant clauses or invoice data, minimizing the need for manual data entry.

2. Conversational Agents for Customer Support

Agents equipped with memory can recall a user’s past interactions, preferences, and account details, enabling hyper-personalized customer service.

3. Intelligent Research Assistants

LLMs that can ingest entire textbooks and research papers are able to answer nuanced questions by referencing highly relevant text chunks and consolidating concepts from multiple sources.

The Future of AI Memory Systems

Looking ahead, the AI community is experimenting with:

- Graph-Based Context Storage: Storing knowledge as interconnected nodes and relationships enables more human-like semantic reasoning, bridging separate pieces of data to form new conclusions.

- Adaptive Memory: Dynamically reorganizing memory depending on usage frequency or recency, mirroring how the human brain prioritizes valuable memories.

- Agent Networks: Multiple specialized AI agents, each with its own memory stores, can coordinate to solve large, multi-step tasks, eventually functioning like collaborative “teams” of digital workers.

- Personalized Model Construction: Ongoing research focuses on using LTM data to build personalized models that adapt to individual user needs and preferences. This personalization is expected to enhance user experience and improve the effectiveness of AI applications across various domains.
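Of the directions listed above, adaptive memory is straightforward to sketch. The example below scores each memory by how often and how recently it was accessed, then evicts the lowest-scoring entry. The scoring formula (exponential recency decay multiplied by a logarithmic frequency term), the one-hour half-life, and all names are assumptions for illustration, not an established standard.

```python
import math


def memory_score(access_count: int, seconds_since_access: float,
                 half_life_seconds: float = 3600.0) -> float:
    """Combine frequency and recency into one priority score."""
    # Recency halves every half_life_seconds.
    recency = 0.5 ** (seconds_since_access / half_life_seconds)
    # log1p dampens the effect of very high access counts.
    frequency = math.log1p(access_count)
    return recency * frequency


def evict_lowest(memories: dict[str, dict], now: float) -> str:
    """Remove and return the key of the lowest-scoring memory."""
    victim = min(
        memories,
        key=lambda key: memory_score(
            memories[key]["count"], now - memories[key]["last_access"]
        ),
    )
    del memories[victim]
    return victim


# Timestamps are plain seconds; the entries are invented.
memories = {
    "favorite_language": {"count": 12, "last_access": 9_000.0},
    "one_off_typo_fix": {"count": 1, "last_access": 1_000.0},
    "current_project": {"count": 4, "last_access": 9_900.0},
}
evicted = evict_lowest(memories, now=10_000.0)
print(evicted)
```

The rarely used, long-untouched entry is evicted first, while frequently or recently accessed memories are retained.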

Why Long-Term and Short-Term Memory Might Not Be the Best Way Forward

While the framework of short-term and long-term memory has provided a helpful analogy for AI systems, it is simplistic when applied to the complexities of machine cognition. Human memory operates within a multilayered, dynamic network of associations, rather than discrete bins of short and long-term storage. Similarly, research on multilayer networks in complex systems emphasizes the importance of interconnected structures for managing information.

Rigid distinctions between memory types can miss the nuanced interrelations of data, which may be better suited to hybrid, hierarchical systems. A multilayer network perspective encourages us to think beyond simple timelines and instead focus on relationships, frequency, and context, all of which could enhance how memory systems are engineered in AI.

As AI continues to evolve, incorporating more sophisticated frameworks inspired by multilayered models could allow for better adaptability, efficiency, and scalability, challenging the binary notion of short-term vs. long-term memory.

Wrapping It Up

Proper memory architecture may be the missing puzzle piece for AI systems striving to be truly production-ready. When effectively engineered, AI applications and agents become more accurate, context-aware, and trustworthy.

cognee integrates advanced memory systems with robust, production-grade data platforms, handling everything from ingestion to analytics and from ephemeral short-term memory to stable, reliable long-term knowledge repositories.

Interested in learning more about bridging data engineering with cognition-inspired AI? Check out our GitHub to see how advanced memory architectures can transform LLMs into real-world solutions, and join our Discord community to keep up with our updates and participate in discussions!

FAQs

- What is LLM memory?

LLM memory refers to how Large Language Models store, manage, and retrieve information. Rather than resetting after every user query, memory-augmented LLMs maintain additional context via data structures (e.g., vector or graph stores) to provide more coherent, long-lived interactions.

- How does memory enhance LLM functionality?

Memory systems reduce hallucinations, improve context retention, and enable AI to handle large and evolving datasets - thus enabling more reliable and sophisticated responses.

- What are the types of memory used in AI?

AI memory commonly splits into short-term memory (context window for the current prompt) and long-term memory (external storage, usually in a database or vector store, for persistent knowledge).

- What challenges exist in AI memory systems?

Challenges include implementing robust data engineering methods, ensuring interoperability across various databases, maintaining performance at scale, protecting privacy, and establishing reliable testing frameworks.

- How will AI memory evolve in the future?

Future improvements focus on adaptive memory, graph-based knowledge storage, more nuanced attention mechanisms, and the orchestration of multiple specialized AI agents working in tandem.

- Does AI have memory?

Traditional AI models don’t exhibit human-like memory processes. However, memory-augmented systems replicate certain aspects of human short-term and long-term memory through external data structures and cognitively inspired processes.

Stay in Touch

We’d love to hear from you - follow us on social media, join our Discord channel, and check out cognee on GitHub. Don’t forget to give us a 🌟 if you like what we’re doing!
